Hey everyone, welcome back to another blog! This time we are going to discuss how to create our own custom rules in Datree. If you don't know about Datree and how it works, you can check out my previous blog, where we discussed Datree in detail.

What is Datree

Datree is an open-source CLI tool that helps engineers create more stable and secure Kubernetes configurations by revealing misconfigurations in the early stages of the pipeline.

About Policy Checks

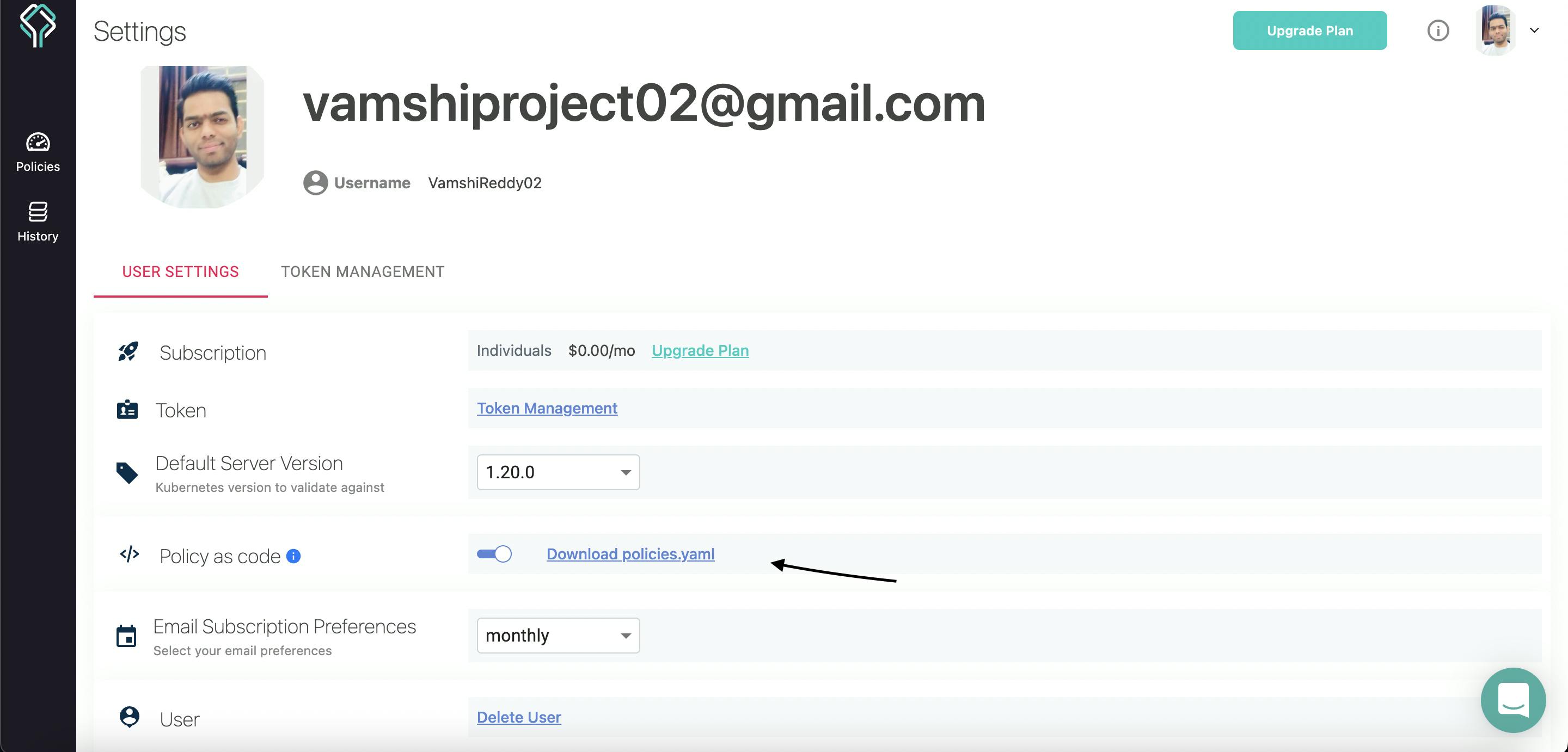

Datree offers something called policy as code, a declarative method to represent your policies. When this mode is enabled, the only way to change the policies in your account is by publishing a YAML configuration file.

Adding Our Own Rules

A policy is basically a collection of rules, and policies are defined in the policy YAML file (policies.yaml in the commands used later), so that file is also where we are going to add our custom rules. The custom rule engine is based on JSON Schema. This is what the default policy file looks like:

    apiVersion: v1
    policies:
      - name: Default
        isDefault: true
        rules:
          - identifier: CONTAINERS_MISSING_IMAGE_VALUE_VERSION
            messageOnFailure: Incorrect value for key `image` - specify an image version to avoid unpleasant "version surprises" in the future
          - identifier: CONTAINERS_MISSING_MEMORY_REQUEST_KEY
            messageOnFailure: Missing property object `requests.memory` - value should be within the accepted boundaries recommended by the organization
          - identifier: CONTAINERS_MISSING_CPU_REQUEST_KEY
            messageOnFailure: Missing property object `requests.cpu` - value should be within the accepted boundaries recommended by the organization
          - identifier: CONTAINERS_MISSING_MEMORY_LIMIT_KEY
            messageOnFailure: Missing property object `limits.memory` - value should be within the accepted boundaries recommended by the organization
          - identifier: CONTAINERS_MISSING_CPU_LIMIT_KEY
            messageOnFailure: Missing property object `limits.cpu` - value should be within the accepted boundaries recommended by the organization
          - identifier: INGRESS_INCORRECT_HOST_VALUE_PERMISSIVE
            messageOnFailure: Incorrect value for key `host` - specify host instead of using a wildcard character ("*")
          - identifier: SERVICE_INCORRECT_TYPE_VALUE_NODEPORT
            messageOnFailure: Incorrect value for key `type` - `NodePort` will open a port on all nodes where it can be reached by the network external to the cluster
          - identifier: CRONJOB_INVALID_SCHEDULE_VALUE
            messageOnFailure: 'Incorrect value for key `schedule` - the (cron) schedule expressions is not valid and, therefore, will not work as expected'
          - identifier: WORKLOAD_INVALID_LABELS_VALUE
            messageOnFailure: Incorrect value for key(s) under `labels` - the vales syntax is not valid so the Kubernetes engine will not accept it
          - identifier: WORKLOAD_INCORRECT_RESTARTPOLICY_VALUE_ALWAYS
            messageOnFailure: Incorrect value for key `restartPolicy` - any other value than `Always` is not supported by this resource
          - identifier: CONTAINERS_MISSING_LIVENESSPROBE_KEY
            messageOnFailure: Missing property object `livenessProbe` - add a properly configured livenessProbe to catch possible deadlocks
          - identifier: CONTAINERS_MISSING_READINESSPROBE_KEY
            messageOnFailure: Missing property object `readinessProbe` - add a properly configured readinessProbe to notify kubelet your Pods are ready for traffic
          - identifier: HPA_MISSING_MINREPLICAS_KEY
            messageOnFailure: Missing property object `minReplicas` - the value should be within the accepted boundaries recommended by the organization
          # - identifier: HPA_MISSING_MAXREPLICAS_KEY
          #   messageOnFailure: Missing property object `maxReplicas` - the value should be within the accepted boundaries recommended by the organization
          - identifier: WORKLOAD_INCORRECT_NAMESPACE_VALUE_DEFAULT
            messageOnFailure: Incorrect value for key `namespace` - use an explicit namespace instead of the default one (`default`)

Let me walk you through the structure used to add your own custom rules.

    customRules:
      - identifier:
        name:
        defaultMessageOnFailure:
        schema:

To create our own rules we need a handful of keys. Let's go through them:

identifier: a unique ID that is used to reference the rule from your policy

name: the title shown when the rule fails

defaultMessageOnFailure: the message shown on the screen when the rule fails

schema: where our rule logic resides, written as JSON or YAML

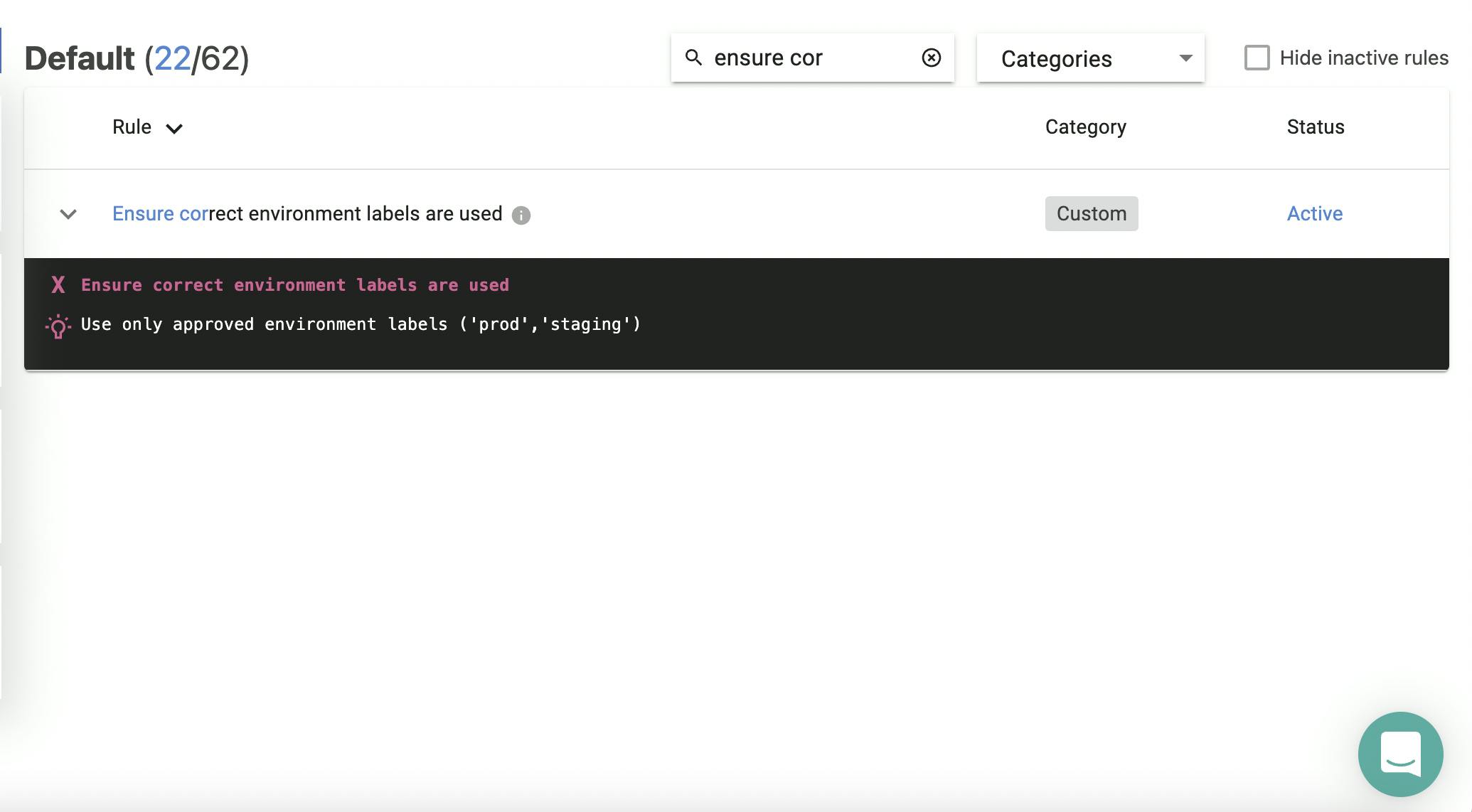

Example: Ensure correct environment labels are used

Let us make a custom rule that ensures correct environment labels are used. As you can see below, we use all four keys: identifier, name, defaultMessageOnFailure, and schema.

    customRules:
      - identifier: CUSTOM_INCORRECT_ENVIRONMENT_LABELS
        name: Ensure correct environment labels are used
        defaultMessageOnFailure: Use only approved environment labels ('prod','staging')
        schema:
          properties:
            metadata:
              properties:
                labels:
                  properties:
                    environment:
                      enum:
                        - prod
                        - staging
                  required:
                    - environment
              required:
                - labels
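
To see what the rule accepts, here is a minimal sketch of a metadata block that should pass it. The resource name my-app is hypothetical; the only part the rule cares about is the environment label, which has to be present and set to one of the enum values.

```yaml
# Hypothetical manifest fragment (the name is made up for illustration).
metadata:
  name: my-app
  labels:
    environment: prod   # listed in enum, so the check passes
    # environment: test  -> fails, 'test' is not listed in enum
    # no environment key -> fails, environment is listed under required
  # no labels key at all -> fails, labels is listed under required
```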

Next, reference this rule in our policy by copying its identifier and pasting it under rules, as you can see below.

NOTE: messageOnFailure is left empty here. When messageOnFailure is empty, Datree uses defaultMessageOnFailure in its place.

    apiVersion: v1
    policies:
      - name: Default
        isDefault: true
        rules:
          - identifier: CUSTOM_INCORRECT_ENVIRONMENT_LABELS
            messageOnFailure: ''
          - identifier: CONTAINERS_MISSING_IMAGE_VALUE_VERSION
            messageOnFailure: Incorrect value for key `image` - specify an image version to avoid unpleasant "version surprises" in the future
          - identifier: CONTAINERS_MISSING_MEMORY_REQUEST_KEY
            messageOnFailure: Missing property object `requests.memory` - value should be within the accepted boundaries recommended by the organization
          - identifier: CONTAINERS_MISSING_CPU_REQUEST_KEY
            messageOnFailure: Missing property object `requests.cpu` - value should be within the accepted boundaries recommended by the organization
          - identifier: CONTAINERS_MISSING_MEMORY_LIMIT_KEY
            messageOnFailure: Missing property object `limits.memory` - value should be within the accepted boundaries recommended by the organization
          - identifier: CONTAINERS_MISSING_CPU_LIMIT_KEY
            messageOnFailure: Missing property object `limits.cpu` - value should be within the accepted boundaries recommended by the organization
          - identifier: INGRESS_INCORRECT_HOST_VALUE_PERMISSIVE
            messageOnFailure: Incorrect value for key `host` - specify host instead of using a wildcard character ("*")
          - identifier: SERVICE_INCORRECT_TYPE_VALUE_NODEPORT
            messageOnFailure: Incorrect value for key `type` - `NodePort` will open a port on all nodes where it can be reached by the network external to the cluster
          - identifier: CRONJOB_INVALID_SCHEDULE_VALUE
            messageOnFailure: 'Incorrect value for key `schedule` - the (cron) schedule expressions is not valid and, therefore, will not work as expected'
          - identifier: WORKLOAD_INVALID_LABELS_VALUE
            messageOnFailure: Incorrect value for key(s) under `labels` - the vales syntax is not valid so the Kubernetes engine will not accept it
          - identifier: WORKLOAD_INCORRECT_RESTARTPOLICY_VALUE_ALWAYS
            messageOnFailure: Incorrect value for key `restartPolicy` - any other value than `Always` is not supported by this resource
          - identifier: CONTAINERS_MISSING_LIVENESSPROBE_KEY
            messageOnFailure: Missing property object `livenessProbe` - add a properly configured livenessProbe to catch possible deadlocks
          - identifier: CONTAINERS_MISSING_READINESSPROBE_KEY
            messageOnFailure: Missing property object `readinessProbe` - add a properly configured readinessProbe to notify kubelet your Pods are ready for traffic
          - identifier: HPA_MISSING_MINREPLICAS_KEY
            messageOnFailure: Missing property object `minReplicas` - the value should be within the accepted boundaries recommended by the organization
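
If you would rather show policy-specific text, my understanding is that a non-empty messageOnFailure takes precedence over defaultMessageOnFailure. Here is a small sketch of that, where the message wording is only an example and not something Datree ships:

```yaml
rules:
  - identifier: CUSTOM_INCORRECT_ENVIRONMENT_LABELS
    # Non-empty, so (as I understand it) this text is shown instead of
    # the rule's defaultMessageOnFailure. The wording is illustrative.
    messageOnFailure: Set the `environment` label to prod or staging
```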

After making all the changes, save the YAML file. Now go to the terminal and run this command:

    $ datree publish policies.yaml
    Published successfully

As you can see below, the environment label is set to 'test', which violates our custom rule.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: rss-site
      namespace: test
      labels:
        owner: --
        environment: test
        app: web
    spec:
      replicas: 6
      selector:
        matchLabels:
          app: web
      template:
        metadata:
          namespace: test
          labels:
            app: we
        spec:
          containers:
            - name: front-end
              image: nginx:latest
              readinessProbe:
                tcpSocket:
                  port: 8080
                initialDelaySeconds: 5
                periodSeconds: 10
              resources:
                requests:
                  memory: "64Mi"
                  cpu: "500m"
                limits:
                  cpu: "500m"
              ports:
                - containerPort: 80
            - name: rss-reader
              image: datree/nginx@sha256:45b23dee08af5e43a7fea6c4cf9c25ccf269ee113168c19722f87876677c5cb2
              livenessProbe:
                httpGet:
                  path: /healthz
                  port: 8080
                  httpHeaders:
                    - name: Custom-Header
                      value: Awesome
              readinessProbe:
                tcpSocket:
                  port: 8080
                initialDelaySeconds: 5
                periodSeconds: 10
              resources:
                requests:
                  cpu: "64m"
                  memory: "128Mi"
                limits:
                  memory: "128Mi"
              ports:
                - containerPort: 88

Now type this command to run the test: datree test ~/.datree/k8s-demo.yaml

    >> File: ../.datree/k8s-demo.yaml

    [V] YAML validation
    [V] Kubernetes schema validation
    [X] Policy check

    ❌ Ensure each container image has a pinned (tag) version [1 occurrence]
       - metadata.name: rss-site (kind: Deployment)
    💡 Incorrect value for key `image` - specify an image version to avoid unpleasant "version surprises" in the future

    ❌ Ensure each container has a configured liveness probe [1 occurrence]
       - metadata.name: rss-site (kind: Deployment)
    💡 Missing property object `livenessProbe` - add a properly configured livenessProbe to catch possible deadlocks

    ❌ Ensure each container has a configured memory limit [1 occurrence]
       - metadata.name: rss-site (kind: Deployment)
    💡 Missing property object `limits.memory` - value should be within the accepted boundaries recommended by the organization

    ❌ Ensure correct environment labels are used [1 occurrence]
       - metadata.name: rss-site (kind: Deployment)
    💡 Use only approved environment labels ('prod','staging')

    ❌ Ensure workload has valid label values [1 occurrence]
       - metadata.name: rss-site (kind: Deployment)
    💡 Incorrect value for key(s) under `labels` - the vales syntax is not valid so the Kubernetes engine will not accept it

    (Summary)

    - Passing YAML validation: 1/1
    - Passing Kubernetes (1.20.0) schema validation: 1/1
    - Passing policy check: 0/1

    +-----------------------------------+-----------------------+
    | Enabled rules in policy "Default" | 22                    |
    | Configs tested against policy     | 1                     |
    | Total rules evaluated             | 22                    |
    | Total rules skipped               | 0                     |
    | Total rules failed                | 5                     |
    | Total rules passed                | 17                    |
    | See all rules in policy           | https://app.datree.io |
    +-----------------------------------+-----------------------+

Woo-hoo!! Our custom rule is visible on the screen.

All our custom rules also show up in our dashboard instantly. How cool is that!

Example: Make sure the correct number of replicas are running

Let us create another custom rule that only allows a Deployment to run between 1 and 5 replicas.

    customRules:
      - identifier: CUSTOM_POLICY_REPLICAS
        name: Make sure correct number of replicas are running
        defaultMessageOnFailure: Make sure running replicas are between 1 - 5
        schema:
          if:
            properties:
              kind:
                enum:
                  - Deployment
          then:
            properties:
              spec:
                properties:
                  replicas:
                    minimum: 1
                    maximum: 5
                required:
                  - replicas
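
A quick way to read this schema: the if block only matches resources whose kind is Deployment, and the then block is applied only to those. The fragment below is a hypothetical spec that should satisfy the rule. The comments list the cases I would expect to fail, going by standard JSON Schema behaviour, where minimum and maximum are inclusive.

```yaml
# Hypothetical Deployment fragment for illustration.
kind: Deployment
spec:
  replicas: 3   # 1 <= 3 <= 5, so the check passes
  # replicas: 6  -> fails, above maximum
  # replicas: 0  -> fails, below minimum
  # no replicas  -> fails, replicas is listed under required
```

Other kinds, such as a Service, do not match the if condition, so the then part is not applied to them.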

Reference this rule in our policy by copying its identifier and pasting it under rules, as you can see below.

    apiVersion: v1
    policies:
      - name: Default
        isDefault: true
        rules:
          - identifier: CUSTOM_POLICY_REPLICAS
            messageOnFailure: ''
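
Putting the pieces together, this is roughly how I would expect the complete policies.yaml to look. It is a sketch that assumes customRules sits at the top level of the file, next to policies; the built-in rules are left out to keep it short.

```yaml
apiVersion: v1
policies:
  - name: Default
    isDefault: true
    rules:
      # Reference to the custom rule defined further down
      - identifier: CUSTOM_POLICY_REPLICAS
        messageOnFailure: ''
# Assumption: customRules is a top-level key, a sibling of policies
customRules:
  - identifier: CUSTOM_POLICY_REPLICAS
    name: Make sure correct number of replicas are running
    defaultMessageOnFailure: Make sure running replicas are between 1 - 5
    schema:
      if:
        properties:
          kind:
            enum:
              - Deployment
      then:
        properties:
          spec:
            properties:
              replicas:
                minimum: 1
                maximum: 5
            required:
              - replicas
```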

Save the YAML file. Now go to the terminal and run this command:

    $ datree publish policies.yaml

As you can see below, the file contains 6 replicas, which violates our rule.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: rss-site
      namespace: test
      labels:
        owner: --
        environment: test
        app: web
    spec:
      replicas: 6
      selector:
        matchLabels:
          app: web
      template:
        metadata:
          namespace: test
          labels:
            app: we
        spec:
          containers:
            - name: front-end
              image: nginx:latest
              readinessProbe:
                tcpSocket:
                  port: 8080
                initialDelaySeconds: 5
                periodSeconds: 10
              resources:
                requests:
                  memory: "64Mi"
                  cpu: "500m"
                limits:
                  cpu: "500m"
              ports:
                - containerPort: 80
            - name: rss-reader
              image: datree/nginx@sha256:45b23dee08af5e43a7fea6c4cf9c25ccf269ee113168c19722f87876677c5cb2
              livenessProbe:
                httpGet:
                  path: /healthz
                  port: 8080
                  httpHeaders:
                    - name: Custom-Header
                      value: Awesome
              readinessProbe:
                tcpSocket:
                  port: 8080
                initialDelaySeconds: 5
                periodSeconds: 10
              resources:
                requests:
                  cpu: "64m"
                  memory: "128Mi"
                limits:
                  memory: "128Mi"
              ports:
                - containerPort: 88

Now type this command to run the test: datree test ~/.datree/k8s-demo.yaml

    >> File: ../.datree/k8s-demo.yaml

    [V] YAML validation
    [V] Kubernetes schema validation
    [X] Policy check

    ❌ Ensure each container image has a pinned (tag) version [1 occurrence]
       - metadata.name: rss-site (kind: Deployment)
    💡 Incorrect value for key `image` - specify an image version to avoid unpleasant "version surprises" in the future

    ❌ Ensure each container has a configured liveness probe [1 occurrence]
       - metadata.name: rss-site (kind: Deployment)
    💡 Missing property object `livenessProbe` - add a properly configured livenessProbe to catch possible deadlocks

    ❌ Ensure each container has a configured memory limit [1 occurrence]
       - metadata.name: rss-site (kind: Deployment)
    💡 Missing property object `limits.memory` - value should be within the accepted boundaries recommended by the organization

    ❌ Make sure correct number of replicas are running [1 occurrence]
       - metadata.name: rss-site (kind: Deployment)
    💡 Make sure running replicas are between 1 - 5

    ❌ Ensure workload has valid label values [1 occurrence]
       - metadata.name: rss-site (kind: Deployment)
    💡 Incorrect value for key(s) under `labels` - the vales syntax is not valid so the Kubernetes engine will not accept it

    (Summary)

    - Passing YAML validation: 1/1
    - Passing Kubernetes (1.20.0) schema validation: 1/1
    - Passing policy check: 0/1

    +-----------------------------------+-----------------------+
    | Enabled rules in policy "Default" | 23                    |
    | Configs tested against policy     | 1                     |
    | Total rules evaluated             | 23                    |
    | Total rules skipped               | 0                     |
    | Total rules failed                | 5                     |
    | Total rules passed                | 18                    |
    | See all rules in policy           | https://app.datree.io |
    +-----------------------------------+-----------------------+

TA-DA!! Our custom rule is visible on the screen.